
Micro-services asynchronous communication: AWS SQS

- September 3, 2022

- Posted by: techjediadmin

- Category: AWS Cloud Applications Cloud Computing

Asynchronous messaging

Messaging systems typically use two patterns for asynchronous communication: point-to-point (one-to-one messages) and publisher-subscriber (one-to-many messages).

Point-to-point: a message is sent from one application (producer) to another application (consumer). Even if several consumers are listening, only one of them receives a given message. The queue also guarantees delivery: it keeps the message until a consumer has processed it.

Publisher-subscriber: a message is sent from one application (producer) to many applications (consumers). Message delivery is not guaranteed in general; for example, a subscriber that is not listening when the message is published may miss it.
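To make the difference between the two patterns concrete, here is a minimal in-memory sketch in plain Python, with no AWS involved. The class names PointToPointQueue and PubSubTopic are hypothetical and exist only for illustration: the queue hands each message to exactly one consumer, while the topic pushes each message to every subscriber registered at publish time and keeps no copy of it.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional


class PointToPointQueue:
    """Each message is delivered to exactly one consumer (competing consumers)."""

    def __init__(self) -> None:
        self._messages: Deque[str] = deque()

    def send(self, message: str) -> None:
        self._messages.append(message)

    def receive(self) -> Optional[str]:
        # Whichever consumer calls receive() first takes the message; others never see it.
        return self._messages.popleft() if self._messages else None


class PubSubTopic:
    """Each message is pushed to every subscriber registered at publish time."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[str], None]] = []

    def subscribe(self, handler: Callable[[str], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, message: str) -> None:
        # Nothing is stored: a subscriber added later never sees this message.
        for handler in self._subscribers:
            handler(message)


if __name__ == "__main__":
    q = PointToPointQueue()
    q.send("video-1")
    print(q.receive())  # consumer A gets "video-1"
    print(q.receive())  # consumer B gets None: the message was already taken

    inboxes: Dict[str, List[str]] = {"catalog": [], "search": []}
    topic = PubSubTopic()
    topic.subscribe(inboxes["catalog"].append)
    topic.subscribe(inboxes["search"].append)
    topic.publish("video-1 ready")
    print(inboxes)  # both subscribers received the message
```

The missing storage in PubSubTopic is exactly why a late or offline subscriber can miss a message in this simplified model.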

What is SQS?

Amazon SQS (Simple Queue Service) is a popular AWS service that provides a secure, durable message queue, mainly used to integrate and decouple distributed software components. It clearly falls under the point-to-point messaging model.

With queues, we have asynchronous communication, allowing the different systems to do their work at their own pace and not be affected by other systems. AWS makes it easy to use SQS and decouple different components in your system.

When to use SQS?

Let us see this with a real-world example. Suppose you work for an OTT company (like Netflix, Prime or Hotstar) and are assigned the task of building a video upload and processing system. Your application (website) already has a working file upload component.

Requirement: as soon as a video is uploaded, you want to process it (a time-consuming job that can take anywhere from minutes to hours) and notify the user (or other systems, such as catalog and search) that the video is available for viewing. Here, both tasks, upload and processing, are time-consuming.

In the traditional synchronous model, the upload component triggers video processing, waits for it to complete, and only then returns the status to the customer. This ties up resources for the whole duration of the processing and makes it hard to scale the two parts independently.

Solution: we can use SQS to decouple these two systems (upload and processing). Once the file transfer is complete, the 'Upload' component sends a message to an SQS queue and immediately returns a response to the user. The message can carry video details, such as the file location and some metadata, for use during processing.
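One common way to carry "file location plus metadata" in a message is a small JSON document. The sketch below assumes that approach; the function names and the field names (schema_version, s3_location, title, tags) are hypothetical, so use whatever schema your upload and processing teams agree on.

```python
import json
from typing import Any, Dict, List


def build_video_message(s3_location: str, title: str, tags: List[str]) -> str:
    """Upload side: serialize what the processing side needs into a JSON message body."""
    payload: Dict[str, Any] = {
        "schema_version": 1,         # hypothetical: lets consumers detect format changes
        "s3_location": s3_location,  # where the uploaded file is stored
        "title": title,
        "tags": tags,
    }
    return json.dumps(payload)


def parse_video_message(body: str) -> Dict[str, Any]:
    """Consumer side: turn the message body back into a dictionary."""
    return json.loads(body)


if __name__ == "__main__":
    body = build_video_message("s3://example-bucket/uploads/video1.mp4", "My video", ["drama"])
    print(body)
    print(parse_video_message(body)["s3_location"])
```

The resulting string is what the upload component would pass as the MessageBody of an SQS send_message call. Keeping the video itself in S3 and sending only its location keeps the message small.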

On the other end, a scheduler system continuously polls the queue. When a new video message arrives, it triggers a processing job, periodically checks the job's status, and keeps track of it. The front-end systems can call this scheduler separately to get status updates. This way, the upload and processing systems can work and scale independently.
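The flow above can be simulated locally to show the decoupling. In this sketch, a standard-library queue.Queue stands in for the SQS queue and a background thread plays the scheduler. All function names and the status values (QUEUED, PROCESSING, READY, FAILED) are hypothetical; a real scheduler would poll SQS and launch actual processing jobs.

```python
import queue
import threading
import time
from typing import Dict

# In-memory stand-in for the SQS queue (assumption: real code would poll SQS instead).
video_queue: "queue.Queue[str]" = queue.Queue()
# Job status per video, maintained by the scheduler and read by the front end.
job_status: Dict[str, str] = {}
status_lock = threading.Lock()


def handle_upload(video_id: str) -> str:
    """Upload side: enqueue the work and return immediately."""
    with status_lock:
        job_status[video_id] = "QUEUED"
    video_queue.put(video_id)
    return "accepted"


def process_video(video_id: str) -> None:
    """Hypothetical placeholder for the long-running processing job."""
    time.sleep(0.1)


def scheduler_loop() -> None:
    """Processing side: take one video at a time, at its own pace."""
    while True:
        video_id = video_queue.get()
        with status_lock:
            job_status[video_id] = "PROCESSING"
        try:
            process_video(video_id)
            new_status = "READY"
        except Exception:
            new_status = "FAILED"
        with status_lock:
            job_status[video_id] = new_status
        video_queue.task_done()


def get_status(video_id: str) -> str:
    """Front-end side: ask the scheduler for the current status."""
    with status_lock:
        return job_status.get(video_id, "UNKNOWN")


if __name__ == "__main__":
    threading.Thread(target=scheduler_loop, daemon=True).start()
    print(handle_upload("video-1"))  # returns before processing finishes
    video_queue.join()  # wait here only so the demo can show the final state
    print(get_status("video-1"))
```

handle_upload never waits on process_video, so the upload side stays responsive no matter how long processing takes. That is the property the queue buys you.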

Coding time:

Let's look at a Python sample that sends and reads SQS messages using the AWS SDK for Python (boto3).

Send a message to a queue (to be used by the upload system)

```python
import boto3

# Create an SQS client (credentials and region come from your AWS configuration)
sqs = boto3.client('sqs')

queue_url = 'SQS_QUEUE_URL'


def send_message_sqs(message, title='TITLE1', tags='TAG1, TAG2'):
    # Send a message to the SQS queue
    response = sqs.send_message(
        QueueUrl=queue_url,
        DelaySeconds=10,  # the message stays invisible to consumers for 10 seconds
        MessageAttributes={
            # Attribute names may only contain letters, digits, '_', '-' and '.'
            'VideoTitle': {
                'DataType': 'String',
                'StringValue': title
            },
            'Tags': {
                'DataType': 'String',
                'StringValue': tags
            }
        },
        MessageBody=message  # e.g. 'FILE_PATH(<<S3 location of file>>)'
    )
    print('Message id for tracking: ' + response['MessageId'])
    return response['MessageId']
```

Receive and delete messages from a queue (to be used by the scheduler/processing system)

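```python
def receive_message_sqs():
    # Receive a message from the SQS queue. VisibilityTimeout is left at the queue
    # default: a value of 0 would let other consumers receive the same message at once.
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=1,
        MessageAttributeNames=['All'],
        WaitTimeSeconds=20  # long polling: wait up to 20 seconds for a message
    )
    messages = response.get('Messages', [])  # the key is missing when no message arrived
    if not messages:
        return None
    message = messages[0]
    receipt_handle = message['ReceiptHandle']

    # Process the message here, before deleting it

    # Delete the received message from the queue
    sqs.delete_message(
        QueueUrl=queue_url,
        ReceiptHandle=receipt_handle
    )
    print('Received and deleted message: %s' % message)
    return message
```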

Why should we delete messages?

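SQS is a reliable point-to-point messaging system. To guarantee delivery, SQS keeps a message in the queue until the consumer confirms that it has processed it, which the consumer does by calling the delete_message API. Receiving a message does not remove it; it only hides the message from other consumers for the duration of the visibility timeout. If the message is not deleted before the timeout expires, it becomes visible again and can be delivered again.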

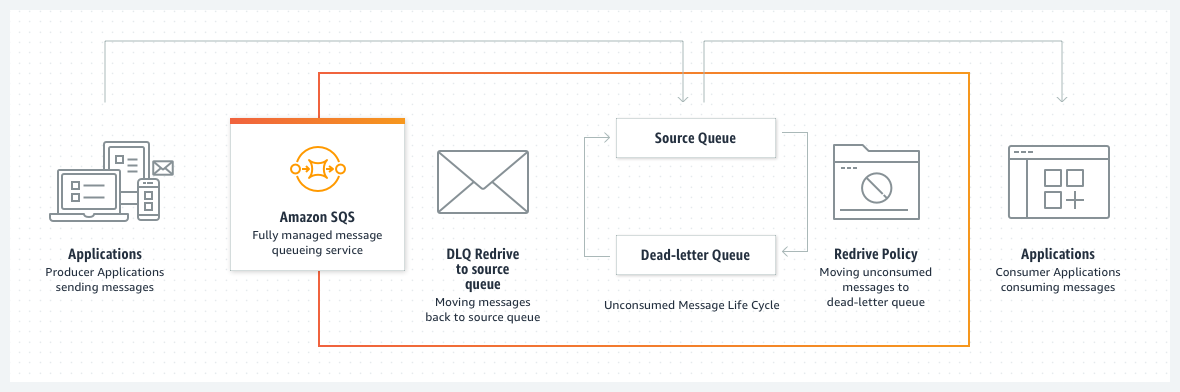
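The receive, process, delete cycle can be sketched as a single polling step that deletes a message only after it has been processed successfully. This is a minimal sketch, not the author's code. poll_once and its handle callback are hypothetical names, and sqs is expected to be a boto3 SQS client such as boto3.client('sqs'). Passing the client in as a parameter also makes the function easy to test with a stub.

```python
from typing import Any, Callable, Dict


def poll_once(sqs: Any, queue_url: str, handle: Callable[[Dict[str, Any]], None]) -> int:
    """Receive up to 10 messages, process each one, and delete only those that succeeded.

    Returns the number of messages deleted.
    """
    response = sqs.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,      # long polling: wait up to 20 s for messages to arrive
    )
    deleted = 0
    for message in response.get("Messages", []):  # the key is absent when nothing arrived
        try:
            handle(message)
        except Exception as exc:
            # Do NOT delete: once the visibility timeout expires, the message
            # becomes visible again and SQS will redeliver it.
            print(f"Processing failed for {message['MessageId']}: {exc}")
            continue
        sqs.delete_message(QueueUrl=queue_url, ReceiptHandle=message["ReceiptHandle"])
        deleted += 1
    return deleted
```

A worker would typically call poll_once in a loop. Deleting only on success is what gives at-least-once processing. The trade-off is that a message can occasionally be processed twice (for example, if the worker crashes after processing but before deleting), so handlers should be safe to repeat.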

What else should you know? Dead-letter queues (DLQs)

A dead-letter queue (DLQ) is a special concept you should be aware of when using SQS. Technically, it is just another queue; it differs only in how it is configured and used. While processing a message from a queue, you might find something wrong and be unable to consume the message. You will hit this case quite often, because the producer and the consumer are two independent systems that are developed and evolve separately. Examples include messages in a different format than expected and messages missing required fields. A DLQ comes to the rescue in these undesirable situations: such messages are moved to a separate queue, instead of being retried over and over, so that the cause of the failure can be investigated later.
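A consumer first needs a way to recognize such "poison" messages. Below is a minimal sketch that assumes JSON message bodies with hypothetical required fields s3_location and title. If the consumer raises and does not delete the message, SQS makes it visible again after the visibility timeout. When a DLQ is configured on the queue, SQS moves the message to the DLQ once it has been received more than maxReceiveCount times.

```python
import json
from typing import Any, Dict

# Hypothetical required fields for the video-processing message.
REQUIRED_FIELDS = ("s3_location", "title")


class PoisonMessageError(Exception):
    """Raised for messages that cannot be processed, however often they are retried."""


def parse_and_validate(body: str) -> Dict[str, Any]:
    """Parse a message body and check that it has the expected shape."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError as exc:
        raise PoisonMessageError(f"body is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise PoisonMessageError("body is not a JSON object")
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        raise PoisonMessageError(f"missing required fields: {missing}")
    return data
```

Using a dedicated exception type lets the processing code log format problems clearly and tell them apart from transient failures, such as a temporarily unavailable downstream service.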

AWS DLQ:

Configuring DLQs in AWS SQS is straightforward: create a separate queue and set it as the dead-letter target in the source queue's redrive policy, together with the maximum number of receives (maxReceiveCount) after which SQS moves a message to the DLQ. We build some developer tools to read and analyze the failed messages, and set up alarms or notifications to alert the team when messages are pushed to the DLQ.
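The redrive configuration can also be applied from code. This sketch uses the boto3 SQS client calls get_queue_attributes and set_queue_attributes with the RedrivePolicy attribute. The helper names, the default of 5 receives, and the example ARN in the usage line (which uses a placeholder account ID) are assumptions for illustration. The DLQ must be of the same type (standard or FIFO) as the source queue.

```python
import json
from typing import Any


def build_redrive_policy(dlq_arn: str, max_receive_count: int = 5) -> str:
    """Return the RedrivePolicy attribute value (a JSON string) for a source queue."""
    if max_receive_count < 1:
        raise ValueError("max_receive_count must be at least 1")
    return json.dumps({
        "deadLetterTargetArn": dlq_arn,
        "maxReceiveCount": str(max_receive_count),
    })


def attach_dlq(sqs: Any, source_queue_url: str, dlq_url: str, max_receive_count: int = 5) -> None:
    """Look up the DLQ's ARN and set it as the source queue's dead-letter target.

    `sqs` is a boto3 SQS client, e.g. boto3.client('sqs').
    """
    attrs = sqs.get_queue_attributes(QueueUrl=dlq_url, AttributeNames=["QueueArn"])
    dlq_arn = attrs["Attributes"]["QueueArn"]
    sqs.set_queue_attributes(
        QueueUrl=source_queue_url,
        Attributes={"RedrivePolicy": build_redrive_policy(dlq_arn, max_receive_count)},
    )


if __name__ == "__main__":
    print(build_redrive_policy("arn:aws:sqs:us-east-1:123456789012:videos-dlq", 3))
```

A low maxReceiveCount moves poison messages aside quickly but leaves less room for retrying transient failures, so pick the value with your processing failure modes in mind.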
